Is Your SEO Strategy Ready for Personalized AI Answers? (Probably Not.)

When Every User Gets a Different Answer, What Is SEO Optimizing For?

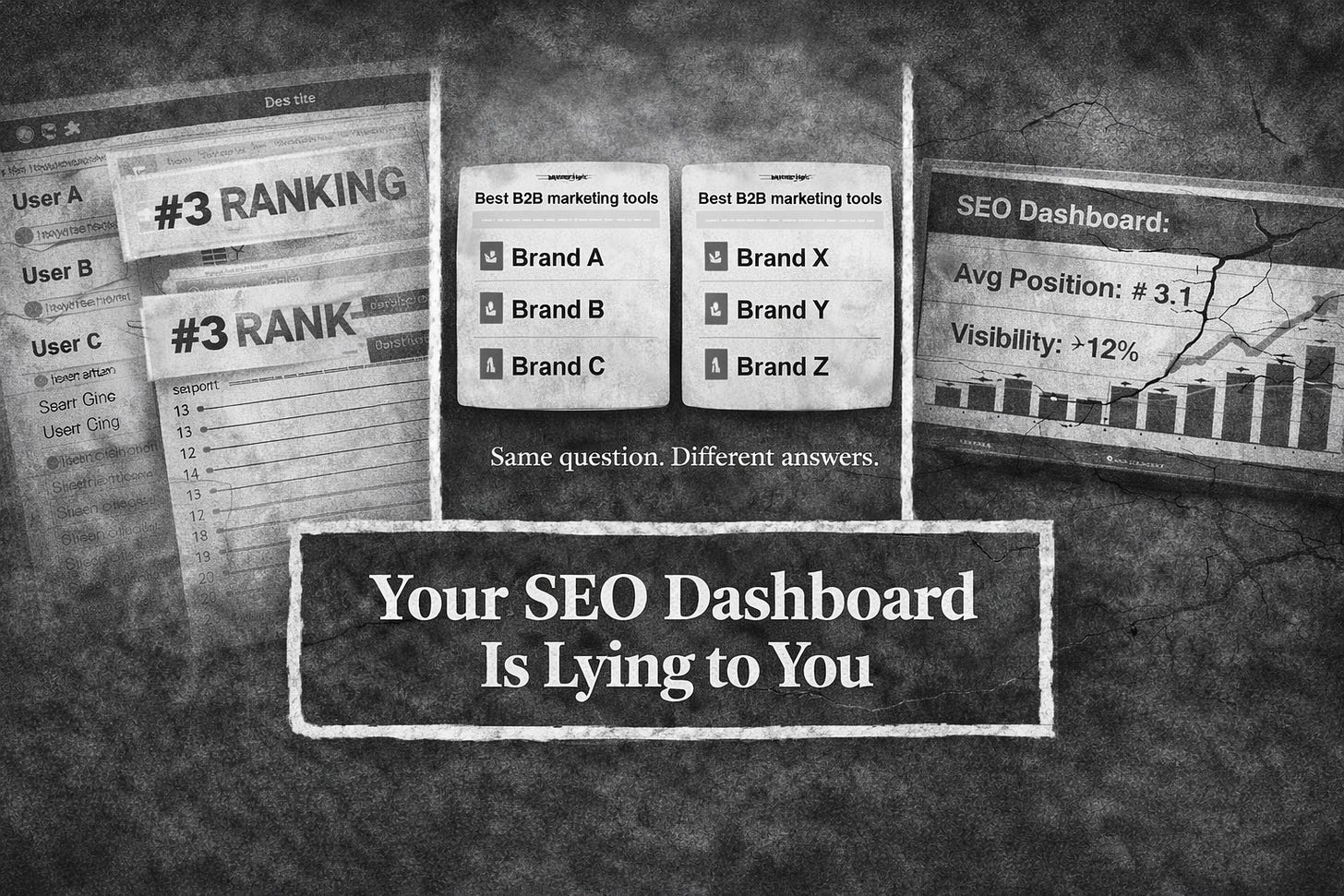

You know what’s breaking right now? The entire concept of “ranking.”

Not slowly. Not “oh we’ll adapt over time.” It’s already broken, and most SEO teams haven’t noticed yet because their dashboards still show pretty green lines going up.

Here’s the problem: you’re measuring success by position #3 for a keyword, but there is no single #3 anymore. There are hundreds of them. Maybe thousands. Each one tailored to a different user, a different context, a different conversation history.

Ask ChatGPT or Perplexity for B2B marketing tools for a small business right now. Then ask your colleague to do the same thing. Different answers. Not slightly different, but materially different: different brands, different reasoning, different “winners.”

Same question. Same model. Totally different outputs.

So when you report “we rank #3,” what does that even mean? #3 for who? In what context? With what user history? The metric you’re optimizing for doesn’t correspond to any single reality anymore.

And that’s just the start of the problem.

This week we’re breaking down why AI answers differ between users, what’s actually personalization versus normal system chaos (they’re not the same thing), and what this means for how you need to think about SEO in 2026.

It gets technical in spots, but every bit of this matters for your day-to-day work.

Stop Confusing Two Completely Different Things

When people see different AI answers, they usually just shrug and say “oh, personalization.” But that’s lazy thinking. There are actually two separate phenomena happening here, and mixing them up will wreck your strategy.

True Personalization (The System Knows You)

This is when the AI intentionally adapts based on signals about you specifically.

OpenAI lets you set custom instructions for things like tone, format, and response style. You tell it “be concise” or “use bullet points” and it remembers. Perplexity goes further, letting you fill out an actual profile with your bio, interests, even your location for better local results. The systems are literally conditioning outputs based on what they know about you as an individual user.

And then there’s memory. If you’ve enabled it (and honestly, most people have without realizing it), ChatGPT is remembering facts across conversations. “I run a SaaS agency.” “I’m vegetarian.” “I prefer short answers.” All that context stacks up and influences future responses.

This is real personalization. The system is using data about you to change what it says.

System Variability That Just Looks Like Personalization

But here’s what most people miss: even with zero user profile, answers can still differ wildly.

The same prompt can generate different outputs because of non-determinism in how these models run inference. Researchers have documented this—you can feed identical inputs and get materially different results just from normal system-level factors.
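
To make that concrete, here’s a toy sketch of one well-known source of run-to-run variation: sampling from a probability distribution instead of always picking the top option. This is not how any specific vendor works. The brand names, the scores, and the `sample_brand` and `run_many` helpers are all made up for illustration, and real systems have other sources of variation too.

```python
import math
import random

# Hypothetical scores a model might assign to candidate brands for ONE prompt.
toy_logits = {"BrandA": 2.0, "BrandB": 1.6, "BrandC": 1.2}

def sample_brand(logits, temperature, rng):
    """Pick one brand using temperature-scaled softmax sampling."""
    names = list(logits)
    scaled = [logits[name] / temperature for name in names]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(names, weights=weights, k=1)[0]

def run_many(temperature, runs=1000, seed=0):
    """Ask the 'same question' many times and count which brand wins."""
    rng = random.Random(seed)
    counts = {name: 0 for name in toy_logits}
    for _ in range(runs):
        counts[sample_brand(toy_logits, temperature, rng)] += 1
    return counts

print(run_many(temperature=1.0))   # the winner changes from run to run
print(run_many(temperature=0.05))  # almost always the top-scored brand
```

Same input, same scores, zero user data, and at the higher temperature the “winner” still changes from run to run.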

Then there’s retrieval. In RAG systems (Retrieval-Augmented Generation, the thing that lets AI pull from the web), what documents get fetched can vary by time, region, how the index is feeling that day, or backend changes nobody tells you about. Unless the system is explicitly using your profile to personalize retrieval, this variability has nothing to do with you. It’s just the system being inconsistent.

And don’t even get me started on model updates, A/B tests, and APIs. You and I could be on completely different model versions right now and we’d never know. Vendors ship changes constantly. Two users in different experimental cohorts see different outputs, and that gets blamed on “personalization” when it’s really just the platform testing new stuff.

Also, you may not know this, but what you get from apps like Claude or ChatGPT can be totally different from what you get when you ask the exact same question through their API. That’s a crucial point many people overlook.

So, what you’re actually experiencing most of the time? A messy blend of some deliberate personalization plus a lot of ordinary system chaos.

How Personalization Actually Happens (When It Does)

Let me walk through the four ways these systems genuinely personalize, because understanding the mechanics changes how you think about optimization.

1. Your Style Preferences Change What Gets Surfaced

Most platforms let you set how you want responses formatted. Seems simple, right? But this doesn’t just change presentation. It changes content selection too, because the model starts prioritizing different trade-offs based on your settings.

If you’ve told ChatGPT you want concise answers, it’s going to lean toward sources that are easier to summarize. If you prefer detailed explanations, it’ll pull from longer-form content. Same question, different emphasis, different sources highlighted.

For SEO, this means visibility isn’t just about being relevant, it’s about being packageable in multiple formats. Dense academic papers might win for some users. Snackable comparison guides might win for others. You need both.

2. Memory Creates Brand Familiarity Loops

The memory feature is where things get interesting. And by interesting, I mean potentially problematic for brands trying to break in.

When the system remembers context across sessions, it can develop preferences based on what worked before. If a user accepted a recommendation last time, that brand gets weighted more heavily next time. If they engaged with certain sources, those sources become the default.

OpenAI’s own documentation frames this as a feature: less repetition, more relevant answers, better user experience. And sure, that’s true from the user’s perspective. But from a brand visibility perspective? You’re looking at path dependency.

Get in early, stay in. Get left out early, fight like hell to break back in.

The privacy angle here is real too. More stored context means more attack surface for data leakage and prompt injection. Research on LLM security consistently flags this risk. But platforms are pushing forward anyway because the UX benefits outweigh the concerns for most users.

3. Location and Context Are Filtering You Out

Even without a long-term profile, the system is making assumptions based on contextual signals.

Google’s been doing this forever and they’ll straight up tell you that search results vary based on language, location, and other factors. Perplexity does it too, using your location to improve accuracy for things like weather and local business queries.

For SEO, this makes local and geo-specific optimization way more fragmented. The “near me” effect gets amplified in AI answers. If you’re not showing up in the right regional context, you’re invisible to entire user segments even if you’d technically be the best answer.

4. Personalized Retrieval Is The Endgame

This is the big one. The mechanism that actually breaks traditional SEO measurement.

What if the AI retrieves different documents because of who you are? Not just different presentation of the same sources, but fundamentally different sources based on your history, preferences, past clicks, previously accepted answers.

Research on personalized LLMs shows this is absolutely possible. You can use embeddings of user profiles, long-term memory stores, or personalized ranking algorithms to tailor which documents even get considered before the model generates an answer.
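
Here’s a minimal sketch of what profile-aware ranking could look like. To be clear, this is an assumption-heavy toy, not a description of how any answer engine actually works: the three-number “embeddings,” the page names, the `alpha` blend weight, and the `personalized_rank` function are all invented for illustration.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def personalized_rank(query_vec, profile_vec, docs, alpha=0.5):
    """Order docs by a blend of query relevance and user-profile affinity.

    alpha=0 ignores the profile entirely; alpha=1 ignores the query.
    """
    def score(doc_vec):
        relevance = cosine(query_vec, doc_vec)
        affinity = cosine(profile_vec, doc_vec)
        return (1 - alpha) * relevance + alpha * affinity

    return sorted(docs, key=lambda name: score(docs[name]), reverse=True)

# Toy 3-dimensional "embeddings": [marketing tools, enterprise, small team].
docs = {
    "enterprise_suite_review": [0.9, 0.8, 0.1],
    "small_team_comparison": [0.9, 0.1, 0.8],
    "generic_tools_list": [1.0, 0.3, 0.3],
}
query = [1.0, 0.0, 0.0]            # "marketing tools"
startup_user = [0.2, 0.0, 1.0]     # hypothetical profile leaning small-team
enterprise_user = [0.2, 1.0, 0.0]  # hypothetical profile leaning enterprise

print(personalized_rank(query, startup_user, docs))
print(personalized_rank(query, enterprise_user, docs))
print(personalized_rank(query, startup_user, docs, alpha=0.0))
```

Same query, same three pages, and the two users get opposite top results. With the profile switched off (`alpha=0.0`), the generic page comes first. That’s the whole problem in about thirty lines.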

If—when—answer engines go this direction at scale, “ranking” stops being a single leaderboard you can check. It becomes thousands of micro-leaderboards. One for each persona. One for each context. One for each user journey pattern.

You can’t just ask “where do we rank?” anymore. The question becomes “which cohorts see us, and which don’t?”

That’s a fundamentally different optimization problem.

Why Everything Won’t Be Hyper-Personalized (Probably)

Before you spiral into panic mode, let’s talk about the forces pushing against extreme personalization. Because it’s not a foregone conclusion that every answer becomes completely unique to each user.

The case for personalization is obvious. Better UX, less repetition, more relevant answers, assistants that feel like they actually know you. OpenAI positions memory and customization as quality-of-life features, and they’re right.

But the case against is strong too.

Privacy and security get harder with every layer of personalization. Every bit of stored context increases the blast radius when something goes wrong: prompt injection, data leaks, inference attacks. Security researchers are sounding alarms specifically about RAG and agent systems that maintain persistent user state.

Then there’s the fairness problem. Heavy personalization can create filter bubbles that reduce viewpoint diversity and reinforce existing beliefs. Great for confirmation bias, terrible for actually learning anything new or encountering alternate perspectives.

And reproducibility becomes nearly impossible. If answers vary too much user-to-user, how do you audit for correctness? How do you verify claims? How do you hold the system accountable when everyone’s seeing something different?

My bet? We’ll see selective personalization in areas where it clearly improves UX (workflow optimization, format preferences, remembered context), but with guardrails in sensitive domains where too much variability creates problems.

Not everything personalized. Not nothing personalized. Somewhere strategic in the middle.

What Changes In Your Actual SEO Work

Enough theory. Let’s talk about what this means for the work you’re doing Monday morning.

You Can’t Measure The Old Way Anymore

Traditional SEO assumes you can check a relatively stable SERP and track your position. That assumption just died.

If two users get materially different recommendations for the same query, what does “average rank” even mean? Not much. Maybe nothing.

You need cohort-based visibility instead. Track by persona, geography, industry, funnel stage. Build test accounts that represent your key audience segments and monitor what each cohort sees over time.

Your SEO reporting is about to shift from “we rank #3 for this keyword” to “we appear in 18% of AI answers for mid-market SaaS buyers in financial services.”
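
If you want a feel for the math behind a number like that, here’s a rough sketch. It assumes you’ve already collected answers from your test accounts and tagged each one with a cohort. The cohort labels, the sample answers, the brand “Acme,” and the `appearance_rate_by_cohort` helper are all hypothetical, and the plain substring match is a simplification you’d want to tighten in practice.

```python
from collections import defaultdict

def appearance_rate_by_cohort(observations, brand):
    """Return {cohort: share of collected answers that mention the brand}.

    observations is an iterable of (cohort, answer_text) pairs.
    Matching is a naive case-insensitive substring check.
    """
    seen = defaultdict(int)
    hits = defaultdict(int)
    for cohort, answer in observations:
        seen[cohort] += 1
        if brand.lower() in answer.lower():
            hits[cohort] += 1
    return {cohort: hits[cohort] / seen[cohort] for cohort in seen}

# Hypothetical answers logged from test accounts, one row per prompt run.
observations = [
    ("mid-market SaaS / finserv", "Top picks: Globex, Initech, and Acme."),
    ("mid-market SaaS / finserv", "Most teams start with Globex."),
    ("mid-market SaaS / finserv", "Initech is a strong fit here."),
    ("mid-market SaaS / finserv", "Consider Globex or Initech."),
    ("startup / ecommerce", "Acme is popular with small teams."),
    ("startup / ecommerce", "Try acme first, then Globex."),
]

rates = appearance_rate_by_cohort(observations, brand="Acme")
for cohort, rate in rates.items():
    print(f"{cohort}: appears in {rate:.0%} of answers")
```

Because answers vary from run to run, a handful of samples per cohort won’t tell you much. Run the same prompts repeatedly and over time before you trust the percentage.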

Our prediction: some of the companies that track LLM prompts (a.k.a. modern keyword tracking tools) may end up shutting down.

That’s a massive change in how you communicate value to executives. And yeah, it’s going to be an uncomfortable conversation the first time you have it. But it’s the only way to measure what’s actually happening.

Keywords Are Out, Entities and Scenarios Are In

Personalized systems don’t want generic pages that try to be everything to everyone. They want content that cleanly matches a specific context.

Think about how this plays out. The AI knows “I’m a Series A SaaS marketer in fintech with a team of five.” It’s looking for content that speaks directly to that scenario. A generic “marketing tools” page doesn’t cut it anymore.

What wins is crystal-clear entity positioning: who you are, what category you own, what you’re best at. No vagueness. And scenario-based content: “best for small teams,” “enterprise compliance guide,” “under $50K budget” comparison pages.

The content also needs to be retrieval-friendly. Structured, specific, easy to snippet without heavy rewriting. If the model has to work hard to extract value from your page, it’ll just cite someone else.

This is exactly why vague positioning gets crushed in AI search. The model needs a crisp “bucket fit” to know when you’re relevant and when you’re not. Our last issue covered exactly this: “SEO x Positioning.”

Brand Compounds Faster (For Better And Worse)

In personalized environments, the AI develops trust priors based on user behavior.

Did your recommendation work last time? You get weighted more heavily next time. Do you show up consistently across sources? The model starts treating you as more authoritative. Does your positioning stay consistent everywhere? That makes you easier to categorize and recommend.

This creates momentum. Once you’re in, you’re more likely to stay in. The user gets familiar with your brand, the AI treats you as a known good option, and the loop reinforces itself.

The flip side? Breaking in gets harder. If you weren’t cited early, you’re fighting uphill against brands that already have that trust prior established.

Brand, distribution, and consistency aren’t buzzwords anymore. They’re literally how personalization algorithms decide who to keep recommending and who to filter out.

You Need Coverage, Not Just One Perfect Page

Stop trying to build one canonical page that ranks for everything. That’s not how personalized systems work.

You need content across the slices that personalization uses. Industries, roles, tool stacks, regions, constraints. “For enterprise” versus “for startups.” “With compliance requirements” versus “move fast and break things.” “North America” versus “EU data residency.”

Alternatives and comparisons too, so you’re eligible when the user’s context implies they’re evaluating trade-offs. If you only have “why we’re great” content, you disappear from consideration sets.

Yeah, this is more work upfront. But it’s how you stay visible across different contexts instead of only appearing for one narrow slice of users and hoping for the best.

Local and Vertical SEO Just Became Your Secret Weapon

If location and context personalization increases (and all signs point to yes), then “best option near me” or “in my country” or “for my compliance regime” becomes a default filter.

Google already does this. Their own documentation talks about how personalization and localization affect results. AI answer engines are going the same direction.

For local businesses and vertical-specific tools, this is actually good news. You don’t need to outrank global giants if you can dominate the personalized context that actually matches your market.

A regional agency in Cleveland doesn’t need to beat the big names in New York. They need to own “Cleveland” plus their vertical. A compliance-focused tool doesn’t need to beat generic project management software. They need to own “HIPAA” or “SOC 2” as qualifiers.

Personalization makes the playing field more fragmented. That’s a threat if you’re trying to be everything to everyone. It’s an opportunity if you’re willing to own a specific slice really well.

The Reality You’re Actually Dealing With

So here’s where we land.

Yes, different users get different LLM answers because of real personalization—memory features, profile settings, contextual signals. OpenAI documents this, Perplexity documents this, it’s happening.

But also yes, a ton of what looks like personalization is just normal system variability. Non-determinism, retrieval inconsistencies, A/B tests you don’t know you’re in, API calls, model updates that ship without announcement.

For SEO, the net effect is the same: you’re moving from single-SERP optimization to probabilistic, cohort-based visibility. Entity clarity, scenario coverage, and brand trust signals determine whether you even get considered in the first place.

The old playbook was “rank #1 for the keyword.” The new playbook is “appear in enough of the right contexts for the right personas that your brand becomes the default answer.”

Is that harder? Yeah, absolutely. More variables, more complexity, less certainty, messier reporting.

But it’s also more defensible once you nail it. Personalization creates momentum. Early wins compound. The brands that figure this out now build advantages that are hard to disrupt later.

So the question isn’t whether personalization is coming to AI search. It’s already here. The question is whether you’re adjusting your strategy while there’s still time to build position, or whether you’re going to keep optimizing for a game that doesn’t exist anymore.

Your call.

Signing Out,

Pankaj & Vaishali.